Overall Graphics Quality:

One of the things that has been talked about in the build-up to BioShock's release is the game's support for DirectX 10. Well, it's true that there is a DirectX 10 version of the game. If you have a graphics card that supports DirectX 10 and are running Windows Vista, the game will use the DirectX 10 codepath automatically. If you're running either a DirectX 9.0c-compliant graphics card or Windows XP, this option will be disabled by default, and the game will run on the DirectX 9.0c (Shader Model 3.0) codepath. What's interesting is that 2K decided to drop support for Shader Model 2.0 hardware, making an SM3.0-capable graphics card the minimum requirement.

If you're wondering what that means: you're out of luck if you're running a video card from ATI's Radeon X800 family, which only supports Shader Model 2.0b, but you're in luck if you're running a GeForce 6-series graphics card, which supports Shader Model 3.0. All ATI graphics cards released after the X800/X850 series (i.e. the X1000 series) support Shader Model 3.0, so they will run the game without any issues.

Before we get onto the differences between the DirectX 10 and DirectX 9.0 codepaths though, let's have a look at overall graphics quality.

There are three graphics presets available in BioShock: high, medium and low. Curiously, the high preset isn't maximum quality, as global lighting is set to "off" when you set the graphics slider to "high". Likewise, the low preset isn't the minimum setting either. This time global lighting is (rather bizarrely) enabled, as are DirectX 10 detail surfaces when you're running the DirectX 10 codepath. Obviously, if you're running under Windows XP, or running a DX9 graphics card under Windows Vista, this option will be disabled.

The low preset also spares you the "low" texture detail setting. If your graphics card isn't up to running the medium texture setting, though, we take pity on you: you can force it to low yourself. Just don't tell anyone you did. Obviously, you can tweak individual graphics settings to your tastes, but the following screenshots should give you an idea of the differences in quality between the various presets.

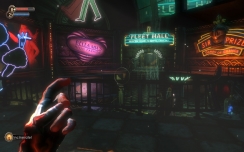

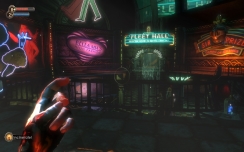

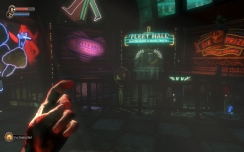

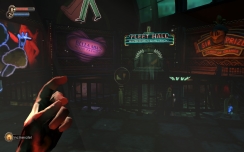

In this screenshot, there is very little difference between "maximum" and "high" quality, but the difference in lighting between "high" and "medium" is huge: the game looks so much moodier at the higher quality settings. The big difference between "medium" and "low" is the lack of shadows and water distortion at "low", while the only real difference between "low" and the minimum settings is texture quality.

The global lighting setting does make a difference in our third comparison between the "maximum" and "high" quality settings: the colours look more vibrant on the Games of Chance sign, and the light reflections on the character's hand are a little less washed out with global lighting enabled.

At medium detail, the lighting looks quite different again. This time it's more washed out than at the high quality setting, and the individual colours aren't as vibrant. The water doesn't look as real, either; instead, it looks like a flat sheet of something meant to resemble water falling from the ceiling in front of the Fleet Hall sign. Moving from medium to low quality, the scene looks much darker overall and the contrast between colours is even less prominent.

Let's have a look at some DX9 vs. DX10 comparisons...

